Wan 2.5 Image to Video

Wan2.2 14b - Image to Video w/ Optional Last Frame

Nano Banana Pro Text-to-Image: Gemini 3 Pro

Z-Image Turbo - Text to Image w/ Optional Image Input (Image to Image)

Wan 2.6 Reference to Video

floyoofficial

2.8k

API

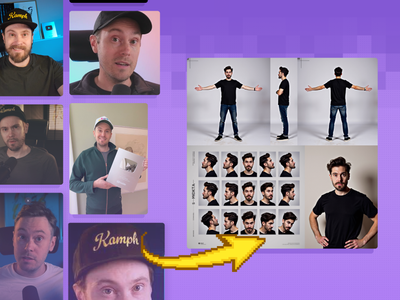

Flux

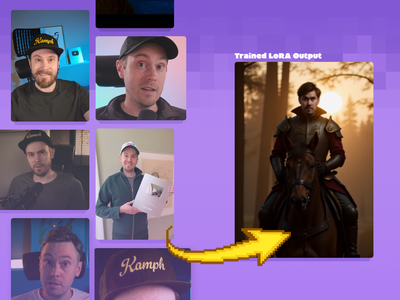

LoRa Training

FLUX is great at generating images, but locking in a specific aesthetic or character is easier with a LoRA. Here's how to create your own.

Fast LoRA Training for Flux via Floyo API

floyoofficial

2.4k

Image to Video

Wan

Created by @vrgamedevgirl on Civitai, please support the original creator!

Wan2.1 FusionX Image2Video

360

Image2Video

Wan2.1

See an image of a character spin 360 degrees.

Key Inputs:

- Image reference: Use any JPG or PNG that shows your subject clearly.
- Width & height: The default resize resolution (480x832) works best for portrait images. If the image is landscape, change it from 480x832 to 832x480.
- Prompt: Follow the example format: "The video shows (describe the subject), performs a r0t4tion 360 degrees rotation."
- Denoise: The amount of variance in the new image. Higher values give more variance.
- File Format: H.264 and more.
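The width and height guidance for the character spin workflow comes down to an orientation check, and the prompt follows a fixed template. A minimal sketch, assuming only the two resolutions and the example prompt format quoted in the description; `pick_resolution` and `build_spin_prompt` are hypothetical helper names, not part of the workflow, and treating square images as portrait is an assumption:

```python
def pick_resolution(image_width: int, image_height: int) -> tuple[int, int]:
    """Return the (width, height) to use for the resize step."""
    # Landscape inputs swap the default portrait resolution.
    if image_width > image_height:
        return (832, 480)
    # Portrait inputs keep the default. Square inputs also fall through
    # to the default here, which is an assumption of this sketch.
    return (480, 832)


def build_spin_prompt(subject: str) -> str:
    """Fill the example prompt format with a description of the subject."""
    return f"The video shows {subject}, performs a r0t4tion 360 degrees rotation."


if __name__ == "__main__":
    print(pick_resolution(1920, 1080))
    print(build_spin_prompt("a knight in silver armor"))
```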

Image to Character Spin

Create Character LoRA Dataset using Qwen Image Edit 2509

Qwen Image Edit 2509 Face Swap and Inpainting

floyoofficial

1.8k

Flux Kontext

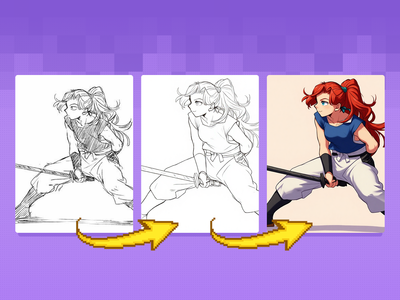

Lineart

Previz

Sketch to Image

Quickly convert rough sketches into polished lineart and colorized concepts. Ideal for early storyboards, character designs, scene planning, and other visual explorations.

Flux Kontext Sketch to LineArt + Color Previz

Animation

Filmmaking

Flux

Game Development

LoRA

Text to Image

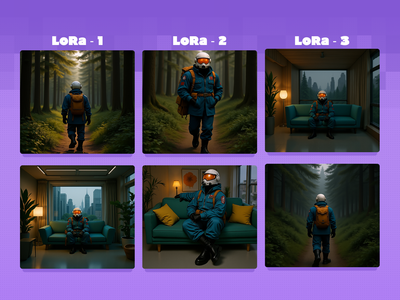

Test and compare multiple epochs of a character LoRA side by side with preset prompts.

When training a LoRA, you'll usually have a few checkpoints throughout the process to test. This workflow lets you load up to 4 LoRAs to test side by side, making it easier to determine which one is right for you!

Key Inputs:

- LoRA Loaders: Load each LoRA epoch for the same character in up to 4 groups.
- Groups Bypasser: Enable or disable groups as needed. If you only have 2 epochs to test, disable the last 2 groups.
- Triggerword: Add the trigger word for your LoRA and it will auto-fill in the default prompts. Leave it blank if you're using your own custom prompts that already include the trigger word.
- LoRA Testing Prompts: The default prompts give a good idea of how your character will look in different situations, but feel free to replace them with your own prompts (max 4).
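The trigger word behavior described for the LoRA test workflow can be pictured as a small text substitution. A minimal sketch, assuming the trigger word is simply prepended to each prompt; how the workflow actually inserts it is not stated in the description, and `fill_prompts` is a hypothetical helper:

```python
MAX_PROMPTS = 4  # the description allows at most 4 testing prompts


def fill_prompts(trigger_word: str, prompts: list[str]) -> list[str]:
    """Prepend the trigger word to each prompt, or leave prompts untouched."""
    if len(prompts) > MAX_PROMPTS:
        raise ValueError(f"at most {MAX_PROMPTS} prompts are supported")
    trigger = trigger_word.strip()
    # A blank trigger word means the prompts already contain it.
    if not trigger:
        return list(prompts)
    return [f"{trigger}, {prompt}" for prompt in prompts]


if __name__ == "__main__":
    # "ohwx_hero" is a made-up trigger word used only for illustration.
    for line in fill_prompts("ohwx_hero", ["portrait photo", "walking in the rain"]):
        print(line)
```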

Flux Character LoRA Test and Compare

ComfyUI Flux LoRA Trainer

Created by @Kijai on Github, please support the original creator!

Flux Dev - Text to Image w/ Optional Image Input

Z-Image Turbo Controlnet 2.1 Image to Image

Qwen Image Edit - Edit Image Easily

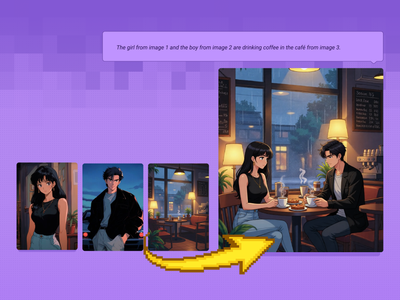

Qwen Image Edit 2509: Combine Multiple Images Into One Scene for Fashion, Products, Poses & more

floyoofficial

2.1k

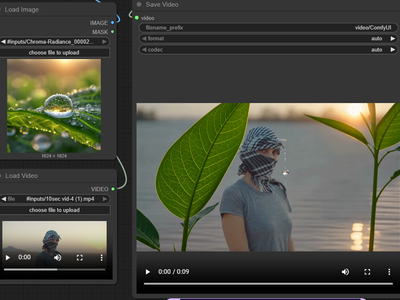

Outpainting

Video to Video

Wan

Wan VACE video outpainting invites you to break free from the limits of the frame and explore endless creative possibilities.

Wan2.1 and VACE for Video to Video Outpainting

API

Flux

Text to Image

Start with a prompt, and get a different render from a range of unique models at the same time.

Multi-Image Flux Ultra, Pro, Dev, Recraft+

Flux

Kontext

Sketch to Image

Bring your sketches to life in full color with Flux Kontext!

Key Inputs:

- Load Image: Upload the sketch you want to transform.
- Prompt: Describe the desired output style, such as "Render this sketch as a realistic photo" or "Turn this sketch into a watercolor painting."

Flux Kontext - Sketch to Image

SeedVR2 Upscale: Upscale Your Image to Extreme Clarity

floyoofficial

2.7k

Filmmaking

LTX 2

LTX 2 Fast

Open Source

Text2Video

Videography

A text-to-video model using LTX 2.

LTX 2 19B Fast for Text to Video

Wan2.2 Animate Character

floyoofficial

2.1k

Controlnet

Flux

Video2Video

Wan2.1

Create a new video by restyling an existing video with a reference image.

Wan2.1 Fun Control and Flux for V2V Restyle

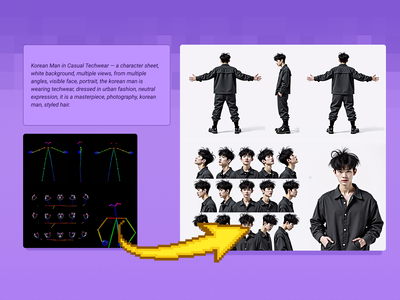

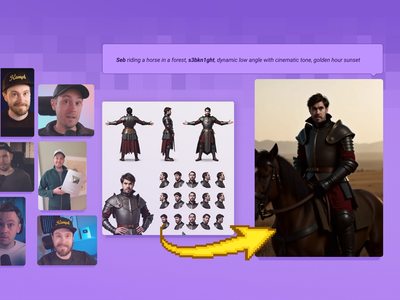

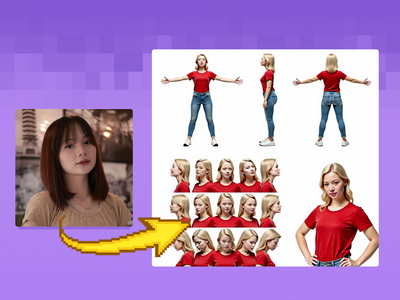

Character Sheet

Controlnet

Flux

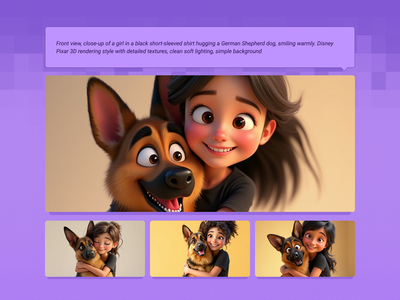

Create a character and a range of consistent outputs suitable for establishing character consistency, training a model, and ensuring consistency throughout multiple scenes.

Key Inputs:

- Image reference: Use the included pose sheet to show a range of positions.
- Prompt: Use as descriptive a prompt as possible.

Flux Text to Character Sheet

floyoofficial

1.0k

Recammaster

Video to Video

Wan

Adjust the camera angle of an existing video, like magic.

Wan2.1 and RecamMaster for V2V Camera Control

VibeVoice Text to Speech Single Speaker

Z-Image Turbo + DyPE + SeedVR2 2.5 + TTP 16k reso

API

Floyo API

Image to Video

Seedance 1.5 Pro

Draft mode lets you first experiment at a low cost by generating 480p draft videos

Seedance 1.5 Pro with Draft Mode

Flux

Flux Kontext

Image2Image

kontext

panorama

Flux Kontext 360° Workflow - Seamless Panorama Generation

- Input: Simply upload an image in the "Load Image from Outputs" node.
- Output: A 360° panoramic image.

Flux Kontext and HD360 LoRA for 360 Degree View

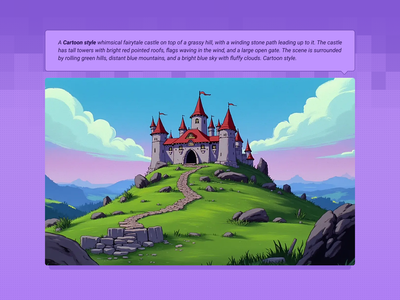

Flux

Text2Image

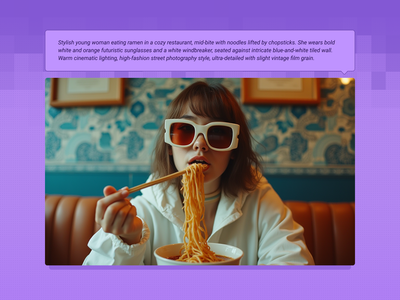

Create original images using only text prompts, which can be simple or elaborate.

Key Inputs:

- Prompt: Use as descriptive a prompt as possible.
- Width & height: Optimal resolution settings are noted.

Flux Text to Image

AnimateDiff

Control Image

HotshotXL

SDXL

Video2Video

Breathe life into a character from an image reference using motion reference from a video.

Key Inputs:

- Image reference: Use any JPG or PNG that clearly shows your subject and the style of your shot.
- Load Video: Use any MP4 that you would like to use for motion reference.

Video to Video with Control Image

Ace+

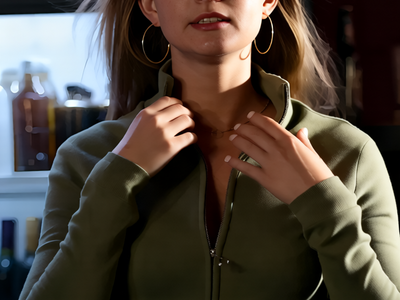

Fashion

Flux

Image to Image

Virtual Try-on

Virtual Outfit Try-On with Auto Segmentation

Try virtual clothing on any subject using Flux Dev, Ace Plus, and Redux, with automatic segmentation. Great for concept previews, fashion mockups, or character styling.

Key Inputs:

- Outfit: Load the outfit image you want to apply. Make sure it's high quality; visible artifacts or distortions may carry over into the final result.
- Actor: Add the subject or character you want to dress. Ideally, use a clear, front-facing image.
- Human Parts Ultra: Choose which parts of the body the clothing should apply to. For example, for a long-sleeve shirt, select torso, left arm, and right arm. This helps the model align the clothing properly during generation.
- Prompt: The default value works for most outfits, but you can adjust it to describe the desired outfit.

Flux Outfit Transfer

Video to Video with Camera Control with Wan

Adjust the camera angle of an existing video, like magic.

VEO3 Future of Video Creation

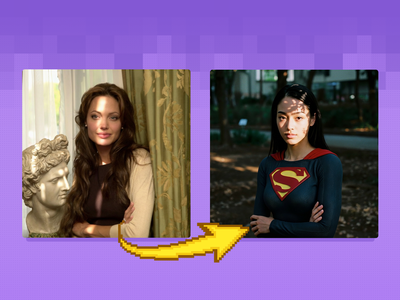

ace

face

face swap

faceswap

face swapper

sebastian kamph

swap

Face swapper built with Flux and ACE++. Works with added details too, like hats, jewelry. Smart features, use natural language.

SMART FACE SWAPPER - Ace++ Flux Face swap

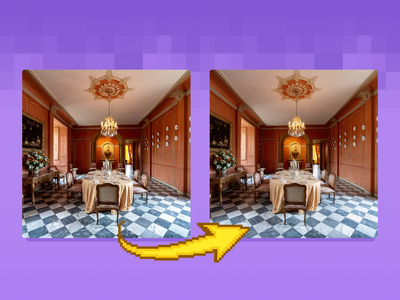

Controlnet

Flux

Image

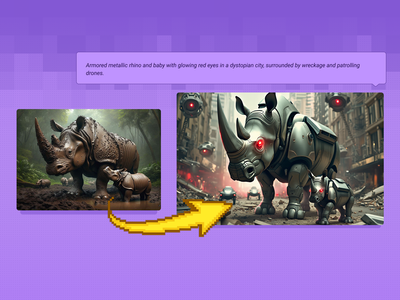

Transform your images into something completely new while retaining specific details and composition from your original using flexible controls.

Key Inputs:

- Image reference: Use any JPG or PNG that shows your subject clearly.
- Prompt: Use as descriptive a prompt as possible.
- Denoise Strength: The amount of variance in the new image. Higher values give more variance.
- Width & height: Try to match the aspect ratio of the original if possible.
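One common way image-to-image samplers interpret a denoise value is as the fraction of the sampling schedule that is actually run on the input image. A minimal sketch under that assumption; the sampler in this workflow may map the value differently, and `steps_to_run` is a hypothetical helper:

```python
def steps_to_run(total_steps: int, denoise: float) -> int:
    """Return how many sampling steps are applied to the input image."""
    if not 0.0 <= denoise <= 1.0:
        raise ValueError("denoise must be between 0.0 and 1.0")
    # Low denoise runs few steps, so the result stays close to the original.
    # High denoise runs most of the schedule, so the result varies more.
    return min(int(total_steps * denoise), total_steps)


if __name__ == "__main__":
    for value in (0.25, 0.5, 1.0):
        print(value, steps_to_run(20, value))
```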

Image to Image with Flux ControlNet

Text to Image with Multi-LoRA

Create consistent images with multiple LoRA models.

Flux

LoRa

Text2Image

Create an image from a trained AI model of something specific (a particular figure, outfit, art style, product, etc.) to ensure those details appear in the result.

Text to Image + LoRA model

Wan2.6 Image to Video

API

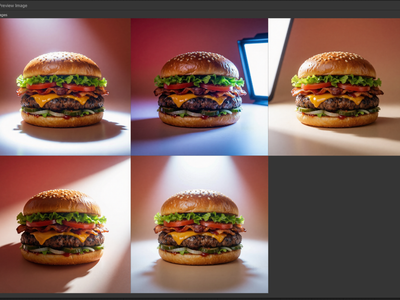

Ecommerce

Image2Image

Nano Banana Pro

Product Ads

Create grids of different angles for your ecommerce products.

Nano Banana Pro for Multi Grid View of Product Ads

Z-Image Turbo with Controlnet 2.1 and Qwen VLM for Creating Accurate Variety of Images

Seedance I2V: Image to Video in Minutes

Image-to-Video with Reference Video (Prompt-Based Camera Rotation)

floyoofficial

1.3k

Animation

Filmmaking

Image to Video

Lipsync

Marketing

Multitalk

Wan2.1

Turn any portrait - artwork, photos, or digital characters - into speaking, expressive videos that sync perfectly with audio input. MultiTalk handles lip movements, facial expressions, and body motion automatically.

Wan2.1 FusionX and MultiTalk - Image to Video

Multi-Angle LoRA and Qwen Image Edit 2509: Unlocking Dynamic Camera Control for Your Images

HunyuanVideo Foley: Create a Lifelike Sound

Character + Outfit → High-End Editorial Shoot

floyoofficial

1.0k

MMaudio

Video to Video

Generate synchronized audio with a given video input. It can be combined with video models to get videos with audio.

MMAudio: Video to Synced Audio

Image

Inpaint

LoRa

Change specific details on just a portion of the image for inpainting or Erase & Replace, adding a LoRA for extra control.

Image Inpainting with LoRA

Flux

Image

Inpaint

Change specific details on just a portion of the image, sometimes known as inpainting or Erase & Replace.

Key Inputs:

- Image reference: Use any JPG or PNG that shows your subject clearly.
- Masking tools: Right-click to reveal the masking tool option, and create a mask of the area you want to inpaint.
- Prompt: Use as descriptive a prompt as possible to guide what you would like replaced in the masked area.

Image Inpainting

Controlnet

SD1.5

Turn your scribbles into a beautiful image with only a drawing tool and a text prompt.

Key Inputs:

- Scribble: Create your scribble with the painting and design tools.
- Prompt: Use as descriptive a prompt as possible.
- Width & height: Optimal resolution settings are noted.
- ControlNet Strength: The amount of adherence to the original image. Higher values give more adherence.
- Start Percent: The point in the generation process where the control starts exerting influence. (Have it start later to let the AI imagine first.)
- End Percent: The point in the generation process where the control stops exerting influence. (Have it end sooner to let the AI finish it off with some variation.)
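The Start Percent and End Percent inputs define a window of the generation process in which the ControlNet is active. A minimal sketch of that idea, assuming the window maps linearly onto step indices; real samplers may map the percentages onto the noise schedule instead, and `control_active_steps` is a hypothetical helper:

```python
def control_active_steps(
    total_steps: int, start_percent: float, end_percent: float
) -> list[int]:
    """Return the step indices during which the control is applied."""
    if not 0.0 <= start_percent <= end_percent <= 1.0:
        raise ValueError("expected 0.0 <= start_percent <= end_percent <= 1.0")
    active = []
    for step in range(total_steps):
        progress = step / total_steps  # fraction of the process completed
        if start_percent <= progress < end_percent:
            active.append(step)
    return active


if __name__ == "__main__":
    # Control only the middle of a 10-step generation.
    print(control_active_steps(10, 0.2, 0.8))
```

A later start leaves the first steps unconstrained, and an earlier end leaves the final steps unconstrained, which matches the two hints in parentheses above.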

Scribble to Image

Start/End Frame Multi-Video via Floyo API

Compare between Luma Dream Machine and Kling Pro 1.6 via Fal API

Image2Video

Start and end frame

Wan2.1

Used for image to video generation, defined by the first frame and end frame images.

Wan2.1 Start & End Frame Image to Video

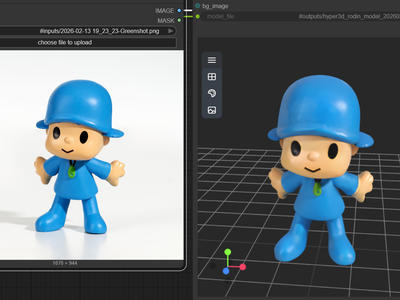

3D View

Animation

Architecture

Filmmaking

Game Development

Hunyuan 3D

Hunyuan3D

Image to 3D

A simple workflow to create a detailed & textured 3D model from a reference image.

Image to 3D with Hunyuan3D

API

Hunyuan

LORA Training

Hunyuan is great at generating videos, but locking in a specific aesthetic or character is easier with a LoRA. Here's how to create your own.

Quick start recipe:

- Upload a Zip file of curated images + captions.
- Enable is_style and include a unique trigger phrase.
- Compare checkpoints with a range of steps to find the sweet spot.

Training overview:

This workflow trains a Hunyuan LoRA using the Fal.ai API. Since it's not training in ComfyUI directly, you can run this workflow on a Quick machine. Processing takes 5-10 minutes with the default settings.

- Open the ComfyUI directory and navigate to the ../input/ folder. Create a new folder there and upload your image dataset to it. If you create a new folder named "Test", the path should look like: ../user_data/comfyui/input/Test
- The default settings work very well. If you would like to add a trigger word for your LoRA, you can do that in the Trigger Word field.
- Once finished, a new URL will appear in your Preview Text node. Copy the URL and paste the path directly to your ../comfyui/models/LoRA/ by using the Upload by URL feature in the file browser.

Prepping your dataset:

- Fewer, ultra-high-res (≈1024×1024+) images beat many low-quality ones.
- Every image must clearly represent the style or individual and be artifact-free.
- For people, aim for at least 10-20 images with different backgrounds (5 headshots, 5 whole-body, 5 half-body, 5 in other scenes).

Captioning:

- Give the style a unique trigger phrase (so that it is not confused with a regular word or term).
- For better prompt control, add custom captions that describe content only, and leave style cues to the trigger phrase.
- Create accompanying .txt files with the same name as the image each one describes.
- If you do add custom captions, be sure to turn on is_style to skip auto-captioning. It is set to off by default.

Training steps:

The default is set to around 2000, but you can train multiple checkpoints (e.g., 500, 1000, 1500, 2000) and pick the one that balances style fidelity with prompt responsiveness.

- Too few steps: the character becomes less realistic or the style fades.
- Too many steps: the model overfits and stops obeying prompts.

Output path:

The output will be a URL in the Preview Text node. Copy the URL and paste the path directly to your ../comfyui/models/LoRA/ by using the Upload by URL feature in the file browser.
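The dataset and captioning guidance above (a Zip file of images, each optionally paired with a .txt caption of the same name) can be prepared with a short script. A minimal sketch using only the Python standard library; `build_dataset_zip` is a hypothetical helper, the accepted image suffixes are an assumption, and the exact archive layout the training API expects is not stated in the description:

```python
import zipfile
from pathlib import Path

# Assumed image types; the description does not list accepted formats.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def build_dataset_zip(dataset_dir: str, zip_path: str) -> list[str]:
    """Zip every image in dataset_dir together with its same-named caption."""
    folder = Path(dataset_dir)
    images = sorted(
        p for p in folder.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES
    )
    if not images:
        raise ValueError(f"no images found in {folder}")
    added = []
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for image in images:
            archive.write(image, arcname=image.name)
            added.append(image.name)
            # A caption is a .txt file with the same name as its image.
            caption = image.with_suffix(".txt")
            if caption.exists():
                archive.write(caption, arcname=caption.name)
                added.append(caption.name)
    return added
```

Calling `build_dataset_zip("Test", "dataset.zip")` on a folder of images returns the list of file names written to the archive, which makes it easy to spot images that are missing a caption.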

LoRA Training Video with Hunyuan

Character Sheet

Image to Image

SDXL

Generate a character sheet with multiple angles from a single input image as reference.

Key Inputs:

- Image reference: Use any JPG or PNG that shows your subject clearly. If you're trying to create a full-body output, a full-body input must be provided.

Image to Character Sheet

floyoofficial

2.2k

Text2Image

Z-Image

Z-image-base

Create stunning images using the Z-Image base model (non-distilled).

Z-Image Base for Text to Image

fx-integration

image-to-image

qwen

reference-image

upscaling

video-conditioning

wan21-funcontrol

Vertical Video FX Inserter - Qwen + Wan 2.1 FunControl

DyPe and Z-Image Turbo for High Quality Text to Image

SeedVR2 and TTP Toolset 8k Image Upscale

Flux 2 Text-to-Image Generation

LoRa

Text2Video

Wan2.1

Generate a high-quality video from a text prompt and add in a LoRA for extra control over character or style consistency.

Key Inputs:

- Prompt: Use as descriptive a prompt as possible.
- Load LoRA: Load your reference model here.
- Width & height: Optimal resolution settings are noted.
- File Format: H.264 and more.

Text to Video and Wan with optional LoRA

Vertical Video Light & Mood Shift

FlashVSR Upscale Your Videos Instantly

Vertical Video FX Inserter / Element Pass with Seedream + Wan

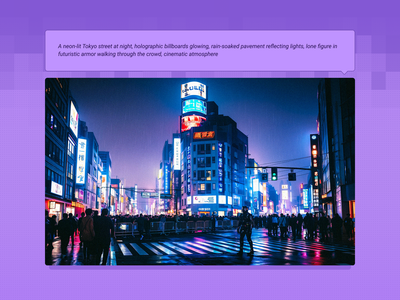

text2image

Wan2.1

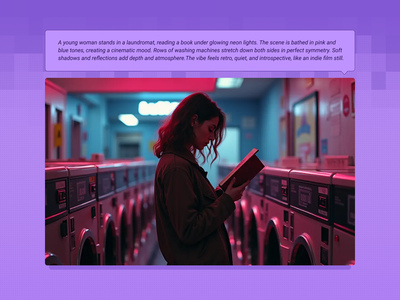

Created by @yanokusnir on Reddit, please support the original creator! https://www.reddit.com/r/StableDiffusion/comments/1lu7nxx/wan_21_txt2img_is_amazing/

If this is your workflow, please contact us at team@floyo.ai to claim it!

Original post from the creator:

Hello. This may not be news to some of you, but Wan 2.1 can generate beautiful cinematic images. I was wondering how Wan would work if I generated only one frame, so as to use it as a txt2img model. I am honestly shocked by the results.

All the attached images were generated in Full HD (1920x1080px), and on my RTX 4080 graphics card (16GB VRAM) it took about 42s per image. I used the GGUF model Q5_K_S, but I also tried Q3_K_S and the quality was still great.

The only postprocessing I did was adding film grain. It adds the right vibe to the images and it wouldn't be as good without it.

Last thing: For the first 5 images I used the euler sampler with the beta scheduler; the images are beautiful with vibrant colors. For the last three I used ddim_uniform as the scheduler and, as you can see, they are different, but I like the look even though it is not as striking. :) Enjoy.

Wan 2.1 Text2Image

SeC Video Segmentation: Unleashing Adaptive, Semantic Object Tracking

character replacement

character swap

image to video

masking

Points Editor

vertical video

Wan2.2 Animate

WanAnimateToVideo

Vertical Video Character Face & Actor Swap (Wan 2.2 Animate)

Flux

Flux.2 Klein

Image2image

Unified workflow: one model for text‑to‑image, image‑to‑image, and image editing

FLUX.2 Klein 9B for Image Editing

Animation

Image to Video

Kling 2.6

Create excellent movement for your characters using Kling 2.6 Standard Motion Control.

Kling 2.6 Standard Motion Control

Flux

Image

UltimateSD

Upscale

A simple workflow to enlarge & add detail to an existing image.

Key Inputs:

- Image: Use any JPG or PNG.
- Upscale by: The factor of magnification.
- Denoise: The amount of variance in the new image. Higher values give more variance.

Flux Image Upscaler with UltimateSD

Flux

Image

Redux

Create variations of a given image, or restyle it. It can be used to refine, explore, or transform ideas and concepts.

Key Inputs:

- Image reference: Use any JPG or PNG that shows your subject clearly.
- Width & height: In pixels.
- Prompt: Use as descriptive a prompt as possible.
- Strength (step 5: value): Strength of the Redux model. Play around with the value to increase or decrease the amount of variation.

Image Redux with Flux

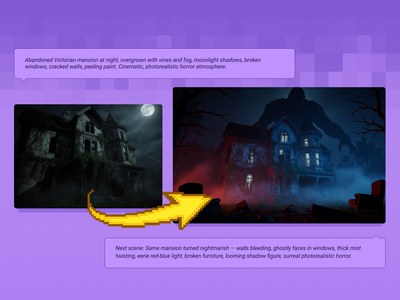

Qwen Image Edit 2509 + Flux Krea for Creating Next Scene

360 Degree Product Video Using Nano Banana Pro

Qwen Image Edit 2511 Restore Damage Old Photograph

Kling Omni One Video to Video Edit

Flux

Image

Outpaint

Extend your images out for a wider field of view or just to see more of your subject. Expand compositions, change aspect ratios, or add creative elements while maintaining consistency in style, lighting, and detail, and blending seamlessly with the existing artwork. Enhance visuals, create immersive scenes, and repurpose images for different formats without losing their original essence.

Key Inputs:

- Image reference: Use any JPG or PNG that shows your subject clearly.
- Prompt: Use as descriptive a prompt as possible to describe the area you want to extend out to.
- Left, Right, Top, Bottom: Amount of extension in pixels.
- Feathering: The radius in pixels around the original image within which the AI-generated outpainting blends with the original.
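The Left, Right, Top, and Bottom inputs determine the size of the extended canvas and where the original image sits inside it. A minimal sketch of that arithmetic; `outpaint_canvas` is a hypothetical helper, and feathering is not modeled here:

```python
def outpaint_canvas(
    width: int,
    height: int,
    left: int = 0,
    right: int = 0,
    top: int = 0,
    bottom: int = 0,
) -> tuple[int, int, tuple[int, int]]:
    """Return (new_width, new_height, (x, y) offset of the original image)."""
    if min(left, right, top, bottom) < 0:
        raise ValueError("extensions must be non-negative pixel counts")
    new_width = width + left + right
    new_height = height + top + bottom
    # The original image is placed at the left/top padding offset.
    return new_width, new_height, (left, top)


if __name__ == "__main__":
    # Widen a 1024x768 image by 256 pixels on each side.
    print(outpaint_canvas(1024, 768, left=256, right=256))
```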

Flux Fill Dev Image Outpainting

Wan2.2 Fun Camera for Camera Control

Nano Banana Pro Edit Image to Image

Chroma 1 Radiance Text to Image

SRPO Next-Gen Text-to-Image

Seedance Text to Video: Create Stunning 1080p Videos Instantly

AniSora 3.2 and Wan2.2: Best Practices for Generating Smooth Character 3D Spin

SAM3 Image Segmentation

Wan2.1 + WanMOVE for Animating Movement using Trajectory Path

FantasyTalking

Image2Video

Lipsync

Wan2.1

Create high-quality lipsync video from image inputs with Wan2.1 FantasyTalking.

Key Inputs:

- Load Image: Select an image of a person with their face in clear view.
- Load Audio: Choose an audio file.
- Frames: The number of frames to generate.

Wan2.1 and FantasyTalking - Image2Video Lipsync

Hunyuan

LoRa

Text2Video

Integrate a custom model with your text prompt to create a video with a consistent character, style, or element.

Key Inputs:

- Prompt: Use as descriptive a prompt as possible. Make sure to include the trigger word from your LoRA.
- Load LoRA: Load your reference model here.
- Width & height: Resolution settings are noted in pixels.
- Guidance strength (CFG): Higher numbers adhere more closely to the prompt.
- Flow Shift: Affects temporal consistency. Adjust it to tweak video smoothness.

Text to Video + Hunyuan LoRA

Integrate a custom model with your text prompt to create a video with a consistent character, style, or element.

Key Inputs
- Prompt: As descriptive a prompt as possible. Make sure to include the trigger word from your LoRA below
- Load LoRA: Load your reference model here
- Width & height: Resolution settings, in pixels
- Guidance strength (CFG): Higher numbers adhere more closely to the prompt
- Flow Shift: Adjust to tweak temporal consistency and video smoothness

Insert Products in Ecommerce Ads - NanoBanana Pro

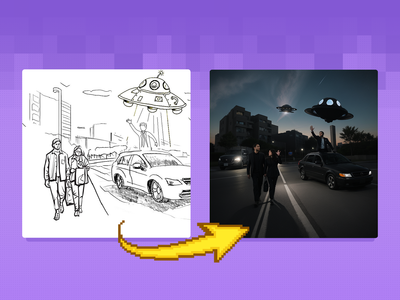

Controlnet

SD1.5

Sketch to Image

Turn your sketches into full-blown scenes.

Key Inputs
- Image reference: Use any JPG or PNG showing your subject clearly
- Prompt: As descriptive a prompt as possible
- Width & height: In pixels
- ControlNet Strength: The amount of adherence to the original image. Higher has more adherence.
- Start Percent: The point in the generation process where the control starts exerting influence. (Start it later to let the AI imagine first.)
- End Percent: The point in the generation process where the control stops exerting influence. (End it sooner to let the AI finish with some variation.)
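As a rough illustration of how Start Percent and End Percent bound the ControlNet's influence, the sketch below maps them onto sampling steps. It assumes the percentages are fractions between 0.0 and 1.0 that map linearly onto step indices, which is a simplification; `control_active_steps` is a hypothetical helper, not part of any workflow node.

```python
def control_active_steps(total_steps, start_percent, end_percent):
    """Return the step indices during which the control is assumed to be active.

    Assumes a linear mapping from percent to step index: a step is active when
    its position in the schedule falls in [start_percent, end_percent).
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if not 0.0 <= start_percent <= end_percent <= 1.0:
        raise ValueError("expected 0.0 <= start_percent <= end_percent <= 1.0")
    return [
        step
        for step in range(total_steps)
        if start_percent <= step / total_steps < end_percent
    ]


if __name__ == "__main__":
    # With 20 steps, start at 25% and end at 75%: the sketch guides the middle
    # of the generation, leaving the first and last quarters to the model.
    print(control_active_steps(20, 0.25, 0.75))
```

Under this assumption, raising Start Percent frees the earliest steps from the sketch, and lowering End Percent frees the final steps.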

Veo 3.1 Image to Video - First Frame and Optional Last Frame

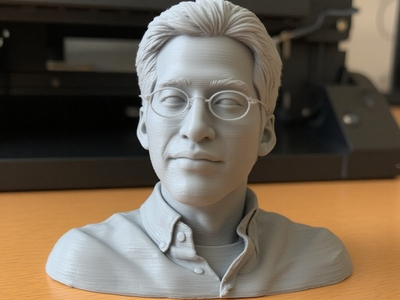

3D Print LoRA and Flux Kontext for Image to 3D Print Mockup

Character Sheet

Controlnet

Flux

Text to Character Sheet with a reference LoRA

Generate a character sheet using a prompt and a LoRA model of a particular person for more accurate renders.

Key Inputs
- Load Image: Use any JPG or PNG of your pose sheet
- Prompt: As descriptive a prompt as possible
- Width & height: Optimal resolution settings are noted at 1280px x 1280px
- Denoise: The amount of variance in the new image. Higher has more variance.
- ControlNet Strength: The amount of adherence to the original image. Higher has more adherence.
- Start Percent: The point in the generation process where the control starts exerting influence. (Start it later to let the AI imagine first.)
- End Percent: The point in the generation process where the control stops exerting influence. (End it sooner to let the AI finish with some variation.)
- Flux Guidance: How much influence the prompt has over the image. Higher has more guidance.

Wan2.1 and ATI for Control Video Motion: Draw Your Path, Get Your Video

Wan LoRA Trainer

Seedream 4.5 Image Generation

Vace

Video

Wan

Simple Self-Forcing Wan1.3B+Vace workflow

Created by @davcha on Civitai, please support the original creator! https://civitai.com/models/1674121/simple-self-forcing-wan13bvace-workflow

If this is your workflow, please contact us at team@floyo.ai to claim it!

Original guide from creator:

This is a very simple workflow to run Self-Forcing Wan 1.3B + Vace. It uses only a single custom node, which everyone making videos should have: Kosinkadink/ComfyUI-VideoHelperSuite. Everything else is pure comfy core. You'll need to download the model of your choice from lym00/Wan2.1-T2V-1.3B-Self-Forcing-VACE on Hugging Face and put it inside your /path/to/models/diffusion_models folder.

This workflow is a very good starting point for experimenting. For how to use Vace, you can refer to [2503.07598] VACE: All-in-One Video Creation and Editing. You don't need to read the whole paper, of course; the information you are interested in is mostly at the top of page 7.

Basically, in the WanVaceToVideo node, you have 3 optional inputs: control_video, control_masks, and reference_image.

The names control_video and control_masks are a little misleading. You don't have to provide a full video. You can in fact provide a variety of things to obtain various effects. For example:
- If you provide a single image, it's more or less equivalent to image2video.
- If you provide a sequence of images separated by empty images (img1, black, black, black, img2, black, black, black, img3, etc.), then it's equivalent to interpolating between all these images, filling in the blacks. A special case that makes this clear: img1, black, black, ..., black, img2 is equivalent to start_img, end_img to video.
- control_masks controls where Wan should paint. Basically, wherever the mask is 1, the original image will be kept. So you can, for example, pad and/or mask an input image, use that image and mask as control_video and control_mask, and you'll basically do an image2video inpaint and outpaint.
- If you input a video in control_video, you can control where the changes should happen in the same way, using control_mask. You'll need to set one mask per frame in the video.
- If you input an image preprocessed with openpose or a depth map, you can finely control the movement in the video output.

reference_image is basically an image that you feed to Wan+Vace to serve as a reference point. For example, if you put an image of someone's face here, there's a good chance you'll get a video with that person's face.
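The keyframe layout described in the guide (img1, black, black, black, img2, ...) can be sketched as a small helper. This only builds the frame ordering with string labels standing in for real image tensors; `build_control_sequence` and the `"black"` placeholder label are hypothetical names used for illustration, and loading or batching actual frames is left to the workflow.

```python
def build_control_sequence(keyframes, gap, placeholder="black"):
    """Interleave keyframes with `gap` placeholder frames between each pair.

    `keyframes` is a list of frame labels (stand-ins for images); the result
    is the ordering that would be fed to control_video for interpolation.
    """
    if gap < 0:
        raise ValueError("gap must be non-negative")
    sequence = []
    for index, frame in enumerate(keyframes):
        if index > 0:
            # Empty frames between keyframes are the ones to be filled in.
            sequence.extend([placeholder] * gap)
        sequence.append(frame)
    return sequence


if __name__ == "__main__":
    # Three keyframes with three empty frames between each pair.
    print(build_control_sequence(["img1", "img2", "img3"], gap=3))
    # Start/end special case: two keyframes with only empty frames between them.
    print(len(build_control_sequence(["start_img", "end_img"], gap=79)))
```

With two keyframes this reduces to the start-frame and end-frame case the guide mentions; the total frame count is the number of keyframes plus `gap` times the number of intervals between them.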

ElevenLabs Text to Speech

VibeVoice Text to Speech Multi Speaker

Speech Multi Speaker

Wan Alpha Create Transparent Videos

Image to Video with Seedance Pro API

🔥Create Stunning 10 Second 3D Spin Shots in Seedance for Characters, Products, and Hero Scenes

Wan2.6 Text to Video

Animation

Filmography

Grok

Image2Video

Grok Imagine for Image to Video

Turn images into excellent video using Grok Imagine

Create Images Using Qwen Image Edit 2511

Flux

Image

LoRa

Upscale

Image Upscaler with LoRA

Create a larger, more detailed image, with an extra AI model for fine-tuned guidance.

Key Inputs
- Load Image: Use any JPG or PNG showing your subject clearly
- Load LoRA: Load your reference model here
- Prompt: As descriptive a prompt as possible
- Upscale by: The factor of magnification
- Denoise: The amount of variance in the new image. Higher has more variance.

Masking

Segmentation

Video

Video Masking with Sam2 Comparison

Use a video clip and visual markers to segment/create masks of the subject or the inverse.

Key Inputs
- Load Video: Use any MP4 that you would like to segment or create a mask from
- Select subject: Use 3 green selectors to identify your subject and one red selector to identify the space outside your subject
- Modify markers: Shift+Click to add markers, Shift+Right Click to remove markers

FlatLogColor LoRA and Qwen Image Edit 2509

Qwen Image 2512 Text to Image

Wan2.2 and Bullet Time LoRA: Transform Static Shots into Product Spins

Vidu Q3 for Image to Video

Turn images into real life

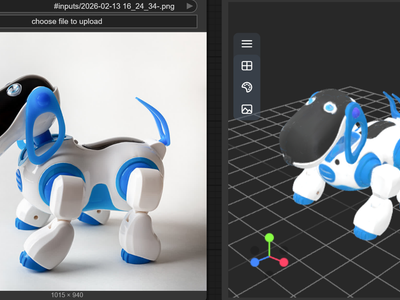

3D

3D Model

Hyper3D Rodin v2

Image to 3D

Rodin v2

Hyper3D Rodin V2 for Image to 3D

Turn your images into 3D using Hyper3D Rodin v2

Tripo3D for Image to 3D

Create a 3D model using Tripo3D v2.5

Kling 3.0 Pro for Image to Video

Turn images into a video using Kling 3.0 Pro

SAM3 for Video Masking using Text

Create a video mask using SAM3 and text only.

Grok Imagine for Text to Video

Create excellent videos using Grok Imagine for T2V

360° Character Turnaround & Sheet Workflow

Qwen Image Edit – Multi-Angle Camera View

Flux

Flux.2 Klein

Image2Image

Inpainting

LanPaint

FLUX.2 Klein 9B Image Inpainting

Inpaint an image using FLUX.2 Klein and LanPaint

Flux

FLUX2 Klein

Photography

Text2Image

FLUX.2 Klein 9B for Text to Image

Create a high-quality image using the 9B model of FLUX.2 Klein

Animation

Filmmaking

Image2Video

Kling 2.6 Pro

Kling 2.6 Pro for Image to Video

Create stunning videos using Kling 2.6 Pro

Chatterbox Text to Speech

Text to speech workflow using Chatterbox

Studio Relighting for Composited Products

LTX 2 Retake Video for Video Editing

Create Cinematic Poster & Ad from Your Product

API

Filmography

Filmmaking

Floyo API

Image2Video

LTX 2 Fast

LTX 2 Fast API for Image to Video

Image to Video using LTX 2 Fast API

LTX 2 Fast API for Text to Video

Text to video using LTX 2 Fast API

Animation

Filmmaking

Image2Video

LTX 2 Pro

Video Editing

LTX 2 Pro API for Image to Video

Image to Video using LTX 2 Pro API

Wan2.1 + SCAIL for Animating Images for Movement

Change Product Shots with NanoBanana Pro

Hunyuan Video LoRA Trainer

Flux LoRA Trainer

Vertical Video Prop & Object Replacement Using Seedream + Wan 2.2

Vertical Video Background & Scene Rebuild

Veo 3.1 Image to Video

Kling Omni One Image to Video

Multiple Angle LoRA and Qwen Image Edit 2509 for Dynamic Camera View

Multiple Angle Lighting LoRA + 2511

Light Restoration LoRA + Qwen Image Edit 2509 Image to Image

Kling 2.5 Image to Video

Qwen Image Edit 2509 and Grayscale to Color LoRA

Ovi: Create a Talking Portrait

Vertical Video Scene Extension & Coverage Generator using Seedream +Wan

Vertical Video Scene Extension & Coverage Generator

Topaz Video Upscaler: Turn Everyday Videos into Crisp, High-Resolution Content for Any Platform

Wan2.1 VACE and Ditto: Artistic Makeovers for Videos

Realistic Product or Props Replacement

Kling Master 2.0 Create Engaging Video Content

Next-Level Motion from Images using MiniMax

Boost Your Creative Video: Comprehensive Solutions with Seedance Image to Video

Craft Stunning Edits Instantly with Nano Banana Edit

Wan2.2 Fun and RealismBoost LoRA for V2V

Modify the Image using InstantX Union ControlNet

Image2Video

LTX

Video

Image to Video with Multiframe Control

Used for image-to-video generation with a first frame, an end frame, or multiple other key frames.

Key Inputs
- Load Image (Start Frame): Use any JPG or PNG showing your subject clearly to start your video
- Load Image (End Frame): Use any JPG or PNG showing your subject clearly to act as the last part of your video. Make sure it's the same resolution as the start frame.
- Width & height: Optimal resolution settings are noted. LTX maximum resolution is 768x512
- Prompt: As descriptive a prompt as possible

Character Sheet

Face Swap

Flux

Image

Image to Image Character Sheet Face Swap with Ace+

Take a character sheet and use a reference image to replace all the faces with that new person.

Key Inputs
- Load Image: Use any JPG or PNG showing your pose sheet
- Load New Face: Use any JPG or PNG that clearly shows the subject you would like to swap into the pose sheet
- Prompt: As descriptive a prompt as possible
- Width & height: Optimal resolution settings are noted at 1024px x 1024px
- Keep Proportion: Enable keep_proportion if you want the output to keep the same size as the input
- Denoise: The amount of variance in the new image. Higher has more variance.

API

Bedrock

Nova Canvas

SDXL

Text to Image

Titan

Amazon Bedrock - Text to Multi-Image with SDXL, Titan and Nova Canvas

Generate and compare images between 3 different models powered by Amazon Bedrock.

Key Inputs
- Prompt: As descriptive a prompt as possible

Models
- SDXL: Solid all-around performer with strong prompt adherence and wide style range
- Titan: Versatile model with built-in editing features and customization flexibility
- Nova Canvas: Quick iterations with creative flair, ideal for brainstorming and concept exploration

3D

Animation

Architecture

Flux

Game Development

Hunyuan 3D

Image to 3D

Upscaling

Image to 3D with Hunyuan3D w/ Texture Upscale

Create a 3D model from a reference image with Flux Dev texture upscaling.

Key Inputs
- Image: Use any JPG or PNG. Load the image you want to generate a 3D asset from; if it has a background, this workflow will remove it and center the subject.
- Prompt: As descriptive a prompt as possible
- Denoise: The amount of variance in the new image. Higher has more variance.

Notes
- If you aren’t satisfied with the initial mesh, cancel the workflow generation, preferably before it reaches the SamplerCustomAdvanced node: applying the textures to the model may take a little more time, and you’ll be unable to cancel during that step.
- The seed is fixed for mesh generation, so if you need to retry the texture upscale you don't also need to regenerate the mesh. To try a different seed for a better mesh, expand the node below and change the seed to another random number. Changing the seed can help in some cases, but ultimately the biggest factor is the input image.
- If the first mesh isn't showing, give it a moment; additional post-processing steps for de-light/multiview are running in the background.

image-to-image

Lipsync

reference-image

seedream

upscaling

Video-conditioning

wan2.1_funControl

Vertical Video Lighting & Mood Shift Using Seedream + Wan

HunyuanImage 3.0 Text to Image

Kling Omni One Image Edit

Flux.2 Klein Image Expansion / Outpaint

GPT Image 1.5 Text to Image

AILab

Audio to Text

Speech to Text

STT

Transcribe

Whisper STT

Create text from speech using Whisper STT

GPT Image 1.5 for Image Editing

API

Filmmaking

Floyo API

LTX 2 Pro

Text to Video

Videography

LTX 2 Pro API for Text to Video

Text to video using LTX 2 Pro API

HunyuanVideo 1.5 for Image to Video

Create Photorealistic Packaging from Dielines

ChatterBox

Higgs

Text to Speech

TTS

VibeVoice

Multi Model for Voice Conversion and Text to Speech

A TTS Audio Suite workflow that can use different types of audio models.

Ecommerce

NanoBanana

Reference Image

Generate Fashion Billboard Using Outfit Image

Upload an outfit image to generate a fashion billboard

Camera Control

Image2Vid

Qwen Image Edit 2511

Vid2Vid

Wan2.6

Camera Angle Creation using Image2Vid

Using witness cameras to recreate additional shots that were not captured by principal photography

Image2Image

Image2Video

Kling Omni One Video Edit

Qwen Image Edit 2511

Change in the Character using Image2Vid

Edit the character in a video without losing quality using a video-to-video workflow

Image2Image

Image Edit

Qwen Image Edit 2511

Camera Angle Control with QwenMultiAngle

Create different angles of an image using Qwen Image Edit 2511 and a special node

Upscaling Images to 4k using Qwen Image Edit 2511

Upscale to 2k to 4k

Animation

Filmography

Image2Video

LTX 2

Open Source

LTX 2 19B Fast for Image to Video

A workflow for LTX 2 image to video using the distilled model

Video Detailer using LTX 2 Vid2Vid

It can enhance the detail of the video

Filmography

LTX 2 Pro

Open Source

Text2Video

Videography

LTX 2 19B Pro for Text to Video

An open source LTX 2 Pro for Text to Video

Image2Video

Kling Omni One

Next Scene LoRA

Qwen Image Edit 2511

Reference2Video

Character Reshoot using Qwen Edit 2511 + Kling O1

Creating a reshoot for a character

Create Magazine Cover & Package Design

Insert Product into Existing Ad

Clothing & Accessories Replacement

GPT-Image 1.5

Image2Image

Image2Video

Kling 2.6

Text2Image

VLM

Create Product Demo from Concept to Video

Create a high quality demo for your products using Kling 2.6 Image to Video

Static Watermark Remover

3D Products with Logo - Wan2.6 Image to Video

Z-Image Turbo + Chord Image to PBR Material

Kandinsky for Text to Video

Creating excellent videos using Kandinsky

Isometric Miniatures from a Selfie

Qwen Image Edit – Portrait Light Migration

Flux

Flux.2 Klein

Image Outpainting

FLUX.2 Klein 4B for Image Outpainting

Outpaint an image using FLUX.2 Klein 4B with LanPaint and the Outpaint LoRA

Flux

Flux.2 Klein 4B

Image2Image

FLUX.2 Klein 4B for Text to Sprite Sheet

Create sprite sheets for game characters using FLUX.2 Klein 4B

Floyo API

Image2Video

PixVerse

Pixverse Swap for Image to Video Swap

Swap objects, characters, and backgrounds using PixVerse

Qwen Thinking Prompt Refiner

Qwen Image Max for Text to Image

Create a high-quality image using the flagship model of Qwen Image

API

Image2Image

Image Editing

Qwen Image Max Edit

Qwen Image Max Edit for Editing Images

Edit images using the flagship Qwen Image Max Edit model

Grok Imagine for Text to Image

Create cool images using Grok Imagine

Grok Imagine for Image Edit

Edit images using Grok Imagine

SAM2

Segment Anything 2

video2video

Video Mask

Segment Anything 2 for Creating Video Mask

Create a video mask frame by frame using Segment Anything 2

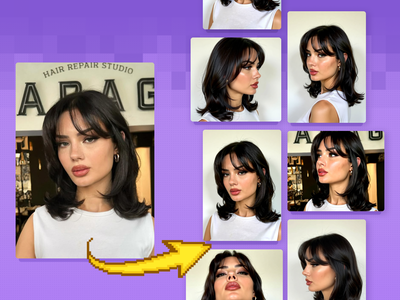

Single Image to Multiple Consistent Shots

SAM3 for Video Masking using Points

Create a video mask using SAM3 and points only.

Image Edit

Qwen

Qwen Image Edit 2511

Relighting

Qwen Multiangle Light with Qwen Image Edit 2511

Relight images using the Qwen Multiangle Light node

Kling 3.0 Pro for Text to Video

Create videos using Kling 3.0

Minimax Speech 2.8 HD for Text to Speech

Create realistic speech using Minimax speech 2.8

Image2Image

Image Editing

Seedream 4.5

Text2Image

Seedream 4.5 Unified for Image Generation

An all-purpose Seedream 4.5 workflow for image generation

LTX 2.0 – Prompting & Dynamic Camera Movement

Vidu Q3 for Text to Video

Create good videos with Vidu Q3

Meshy v6 Text to 3D Model

Create a 3D model from text using Meshy v6

Meshy v6 for Image to 3D Model

Create a 3D model from an image using Meshy v6

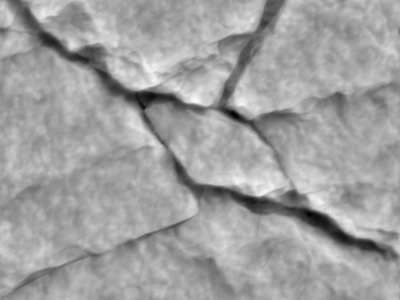

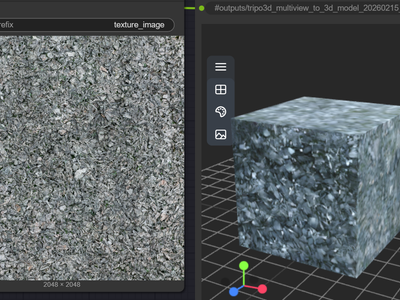

3D

Chord

Game Design

PBR Material

Text to 3D

Ubisoft

Chord for PBR Material Generation using Text to 3D

Create a 3D Game Material Asset using Chord Model from Ubisoft

Kling 3.0 Standard for Text to Video

Create videos using Kling 3.0 Standard

Kling 3.0 Standard for Image to Video

Animate the images using Kling 3.0 Standard

Image Editing

Qwen

Qwen Image Edit 2511

VNCCS Utils

Qwen Image Edit 2511 and VNCCS Utils - Visual Pose

Create different poses of a person using the VNCCS custom node and Qwen Image Edit 2511

Clothes Swap

Flux

Flux.2 Klein

Image Editing

LanPaint

FLUX.2 Klein 4B and LanPaint for Swap Clothes

Replace clothes using FLUX.2 Klein 4B

ComfySketch for Creating Images

Draw cool images using comfysketch

Kling 3.0 for Video Generation

Coming soon page for Kling 3.0

Z-Image Base

It will come out soon.

SopranoTTS for Text to Speech

Turn text into speech using Soprano TTS

Enjoy Effortless Image-to-Image Transformation to Jaw-Dropping Photorealism using Anime2Reality LoRA

Krea Wan 14B Video to Video

Wan 2.6 Video Generation

MiniMax Text-to-Video will Bring Your Creative Concepts to Life with Realistic Motion

Dreamina 3.1 Text to Image

ACE-Step 1.5 for Music Generation

Create stunning music using ACE Step 1.5

Kling Omni 1 Reference to Video

Create a Fashion Shoot - NanoBanana + Kling

Ovis Text to Image
