Nano Banana Pro Text-to-Image: Gemini 3 Pro

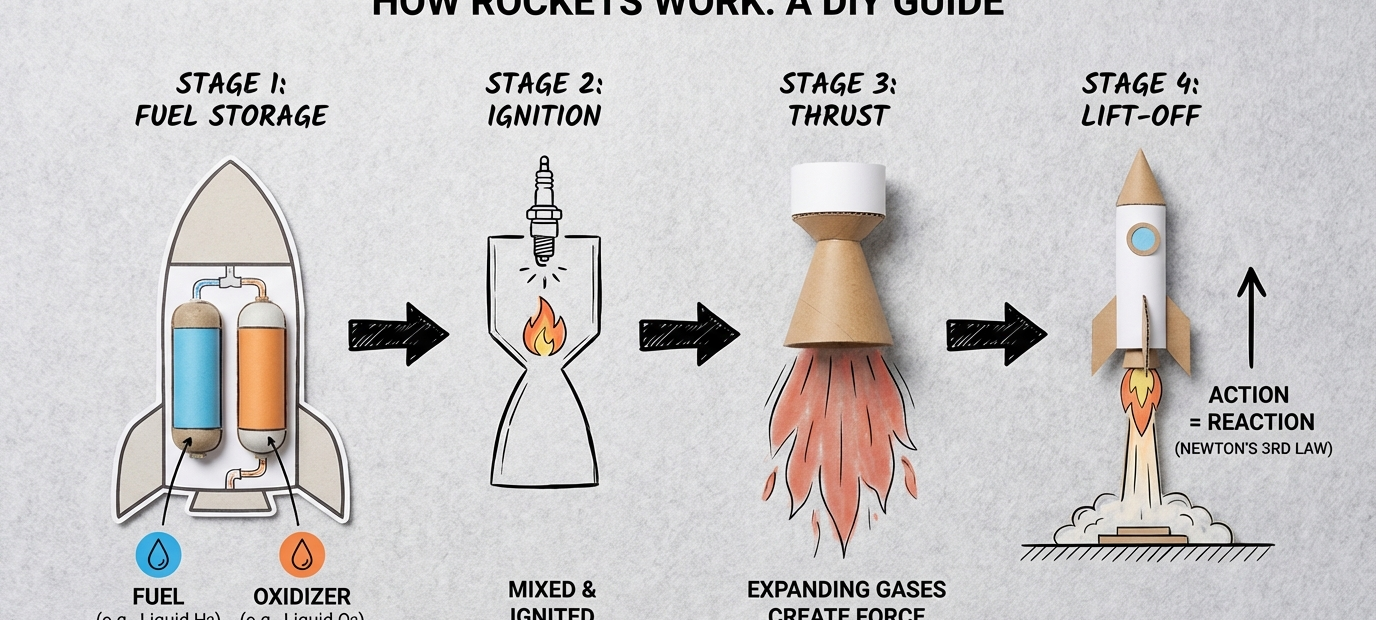

Google just released Nano Banana Pro, and honestly, it's a pretty big step up from the original Nano Banana. The main thing? It can actually put legible text in images now. Like, real text that you can read, not the garbled nonsense most AI models spit out.

If you've been generating thumbnails, storyboards, or mockups and manually adding text afterward in Photoshop or Figma, this changes things.

The text rendering thing is legitimately useful

Most image models struggle with text. You ask for a poster with "SUMMER SALE" and get "SUMMRE SAALE" or some weird symbols. Nano Banana Pro actually gets it right most of the time.

What this means for you

You can generate YouTube thumbnails with actual readable titles, create product mockups with proper labels, or make infographics without needing to layer text separately. It works in multiple languages too, which is wild if you're creating content for different markets.

It understands what you're actually asking for

This is built on Gemini 3 Pro, which means it has better reasoning than your typical image model. If you say "create a diagram showing how photosynthesis works," it doesn't just make something that looks science-y. It pulls from actual knowledge about photosynthesis.

The model can connect to Google Search for real-time info. I asked it to make a weather infographic for my city, and it pulled in current data automatically. That's pretty neat for content that needs to be up-to-date.

Practical examples that worked

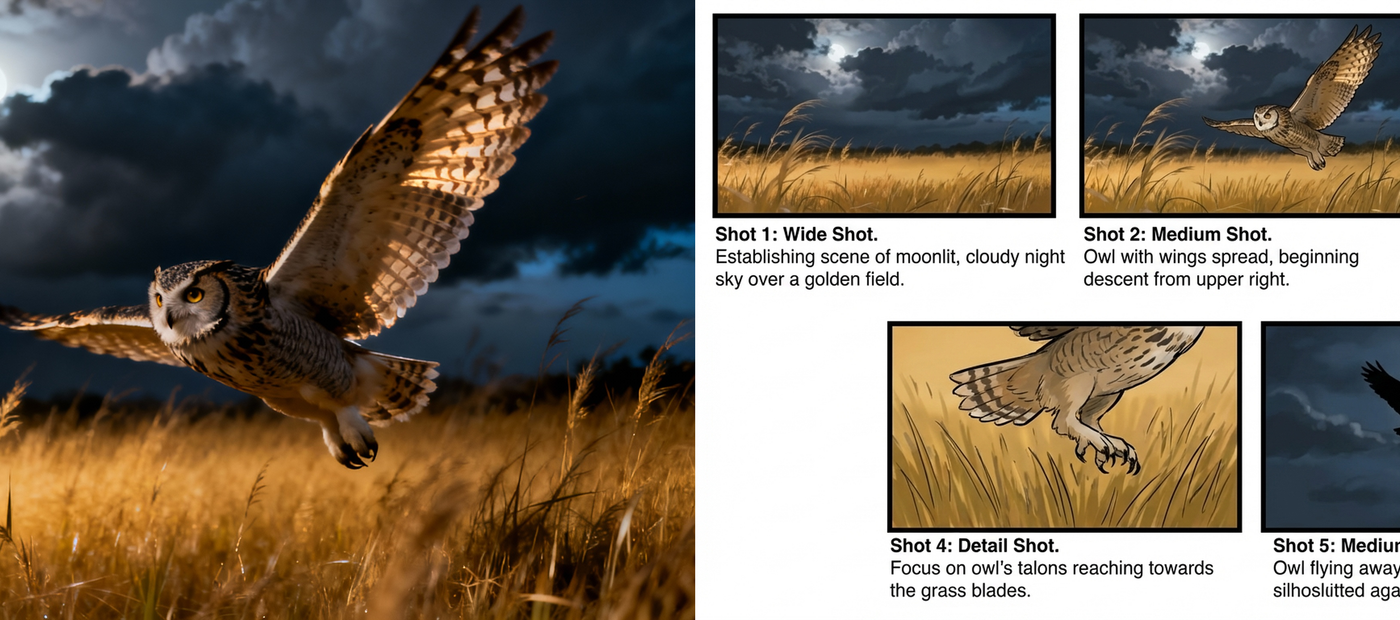

Film pre-production: Created shot lists with proper cinematography terminology baked into each frame. Made a 6-panel storyboard with labels that actually looked like a production storyboard, not comic panels.

Ecommerce product mockups: Generated phone case designs with brand logos that wrapped correctly around the edges while maintaining perspective. Also made product packaging with ingredient lists, barcodes, and legal disclaimers that stayed readable at 8 pt.

Film location scouting concepts: Asked it to visualize specific locations based on script descriptions ("abandoned warehouse, industrial district, late afternoon, overcast lighting"). Got frames that matched the mood we needed to pitch to the director.

Flash sale campaign adaptations: Created a "30% OFF - 24 HOURS" flash sale visual, then regenerated it for different placements: 16:9 for email headers, 1:1 for Instagram posts, 9:16 for Stories, and the 728x90 pixel banner size (roughly 8:1) for the website. Every version kept the same branding and legible text. Saved hours compared to resizing in Photoshop.

Fashion lifestyle shots with multiple inputs: Uploaded 8 different images (model poses, clothing items, background locations, styling references) and blended them into cohesive lifestyle shots. The model kept the same person's features across different outfits and settings, which usually isn't possible without an actual photo shoot.

4K product detail shots: Generated extreme close-ups at 4K resolution of things like watch mechanisms, fabric textures, and jewelry details. Also tested an insect macro shot (butterfly wing patterns), and the detail level was honestly impressive: individual scales visible, color gradients accurate. Useful for hero images that need to look premium.

Multilingual ecommerce assets: Translated the same product label into Spanish, Japanese, and German without breaking the layout. The nutrition facts table stayed properly formatted across all versions.
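In the flash sale example, 728x90 is a pixel size (about 8.09:1) rather than one of the usual aspect ratios. As a minimal sketch, the code below picks the closest ratio from a list the generator is assumed to accept and flags placements that will still need a crop after generating. The `SUPPORTED_RATIOS` list and the pixel sizes for the email, Instagram, and Stories placements are illustrative assumptions, not values confirmed by this workflow; check the current API documentation before relying on them.

```python
import math

# Assumption: aspect ratios the image model accepts natively.
# Illustrative only; confirm against the current API documentation.
SUPPORTED_RATIOS = ["1:1", "2:3", "3:2", "3:4", "4:3", "4:5", "5:4", "9:16", "16:9", "21:9"]

# Hypothetical target sizes in pixels; only 728x90 comes from the example above.
PLACEMENTS = {
    "email header": (1600, 900),
    "instagram post": (1080, 1080),
    "stories": (1080, 1920),
    "website banner": (728, 90),
}


def ratio_value(ratio: str) -> float:
    """Convert a "W:H" string to a width/height float."""
    w, h = ratio.split(":")
    return int(w) / int(h)


def plan_render(width: int, height: int, tolerance: float = 0.02) -> tuple[str, bool]:
    """Pick the supported ratio closest to width/height.

    Distances are measured on a log scale so wide and tall formats are
    treated symmetrically. The bool is True when the render already matches
    the target shape and False when it will need a crop afterwards.
    """
    target = math.log(width / height)
    best = min(SUPPORTED_RATIOS, key=lambda r: abs(math.log(ratio_value(r)) - target))
    exact = abs(math.log(ratio_value(best)) - target) <= tolerance
    return best, exact


if __name__ == "__main__":
    for name, (w, h) in PLACEMENTS.items():
        ratio, exact = plan_render(w, h)
        action = "use as-is" if exact else "crop after generating"
        print(f"{name:15} {w}x{h} -> render at {ratio}, {action}")
```

Under these assumptions, the three social and email placements map directly onto a supported ratio, while the 728x90 banner lands on 21:9 and still needs a crop.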

The creative controls are more precise

You can get specific about camera angles, lighting, and composition. Instead of saying "make it look cinematic," you can say "low angle shot with f/1.8 shallow depth of field, golden hour backlighting."

For video creators, this is huge for storyboarding. You can spec out exact shots with proper cinematography language and get frames that match your vision.
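One way to keep shot specs precise and repeatable across a storyboard is to hold each frame's camera, lens, and lighting terms in separate fields and assemble the prompt from them. This is a minimal sketch: the `ShotSpec` class and its field names are hypothetical and not part of any Floyo or Google API, and putting the exact on-image text in quotation marks is a common prompting convention rather than a documented requirement.

```python
from dataclasses import dataclass


@dataclass
class ShotSpec:
    """Hypothetical structured description of a single frame."""
    subject: str
    camera: str = ""    # e.g. "low angle shot"
    lens: str = ""      # e.g. "f/1.8 shallow depth of field"
    lighting: str = ""  # e.g. "golden hour backlighting"
    text: str = ""      # exact text to render in the image, if any

    def to_prompt(self) -> str:
        # Keep a fixed order so every frame in a storyboard reads the same way.
        parts = [self.camera, self.subject, self.lens, self.lighting]
        prompt = ", ".join(p for p in parts if p)
        if self.text:
            # Quote the literal string so the wording to render is unambiguous.
            prompt += f', with the text "{self.text}" clearly legible'
        return prompt


frame = ShotSpec(
    subject="a detective stepping out of a taxi",
    camera="low angle shot",
    lens="f/1.8 shallow depth of field",
    lighting="golden hour backlighting",
)
print(frame.to_prompt())
```

Changing only `camera` or `lighting` between frames keeps the rest of the description stable, which makes side-by-side storyboard frames easier to compare.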

Lighting control example

I asked it to take a portrait and apply "chiaroscuro effect with directional light from above left, deep shadows, only eyes and cheekbones illuminated." It actually understood and executed that. Before, you'd need to describe lighting in vaguer terms and hope for the best.

Image blending is surprisingly capable

You can upload up to 14 images and maintain consistency across them. This is useful if you're creating content with recurring characters or trying to maintain a consistent brand aesthetic across different scenes.

I tested this by feeding it character designs drawn from different angles and asking it to create a new scene. The character actually stayed consistent, which usually takes multiple regenerations with other tools.
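When blending many references, it can help to check the count against the 14-image limit mentioned above and to tell the model what each image is for. The sketch below numbers the references by role and prepends that legend to the instruction. `Reference`, `build_blend_request`, and the legend format are hypothetical helpers for illustration, not part of this workflow or any published API; how the images are actually uploaded is out of scope here.

```python
from dataclasses import dataclass

# Limit taken from the article; confirm against the current model documentation.
MAX_REFERENCE_IMAGES = 14


@dataclass
class Reference:
    path: str  # hypothetical local file path
    role: str  # what the image contributes, e.g. "character, front view"


def build_blend_request(refs: list[Reference], instruction: str) -> tuple[list[str], str]:
    """Validate the reference count and prefix the instruction with a numbered legend."""
    if not refs:
        raise ValueError("at least one reference image is required")
    if len(refs) > MAX_REFERENCE_IMAGES:
        raise ValueError(f"{len(refs)} references exceed the limit of {MAX_REFERENCE_IMAGES}")
    # Number references in upload order so the instruction can point at them.
    legend = "; ".join(f"image {i}: {r.role}" for i, r in enumerate(refs, start=1))
    return [r.path for r in refs], f"{legend}. {instruction}"


paths, prompt = build_blend_request(
    [
        Reference("hero_front.png", "character, front view"),
        Reference("hero_side.png", "character, side view"),
        Reference("street.png", "background location"),
    ],
    "Place the character from images 1 and 2 in the location from image 3, "
    "keeping the face and outfit consistent.",
)
print(prompt)
```

Failing early on the count keeps a 15-image batch from being sent at all, and the numbered legend gives the instruction a stable way to refer to each input.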

Nodes & Models

NanoBananaProUnified_floyo

WorkflowGraphics

LoadImage

SaveImage

PreviewImage

ImageConcanate
